For tech investors, 2025 began with a panic.

A hyper-efficient new AI model from Chinese developer DeepSeek made Wall Street question whether Big Tech companies and Silicon Valley start-ups were overspending on huge new data centres and expensive Nvidia chips to build AI apps such as ChatGPT.

Six months later, those worries look like a blip. The AI infrastructure-building frenzy has continued with a vengeance.

Microsoft, Alphabet, Amazon and Meta plan to increase their capital expenditures to more than $300bn in 2025. IT consultancy Gartner reckons a total of $475bn will be spent on data centres this year, up 42 per cent on 2024.

Some believe even that will not be enough to keep up with global demand for AI. McKinsey predicted in April that $5.2tn of investment in data centres would be required by 2030.

Earlier this month, Meta founder Mark Zuckerberg announced that his company was investing “hundreds of billions of dollars into compute to build superintelligence”, referencing attempts to surpass human cognition. The spending will go on data centre clusters — one with a footprint large enough to cover most of Manhattan. He is planning others, each named after the Titans of Greek mythology.

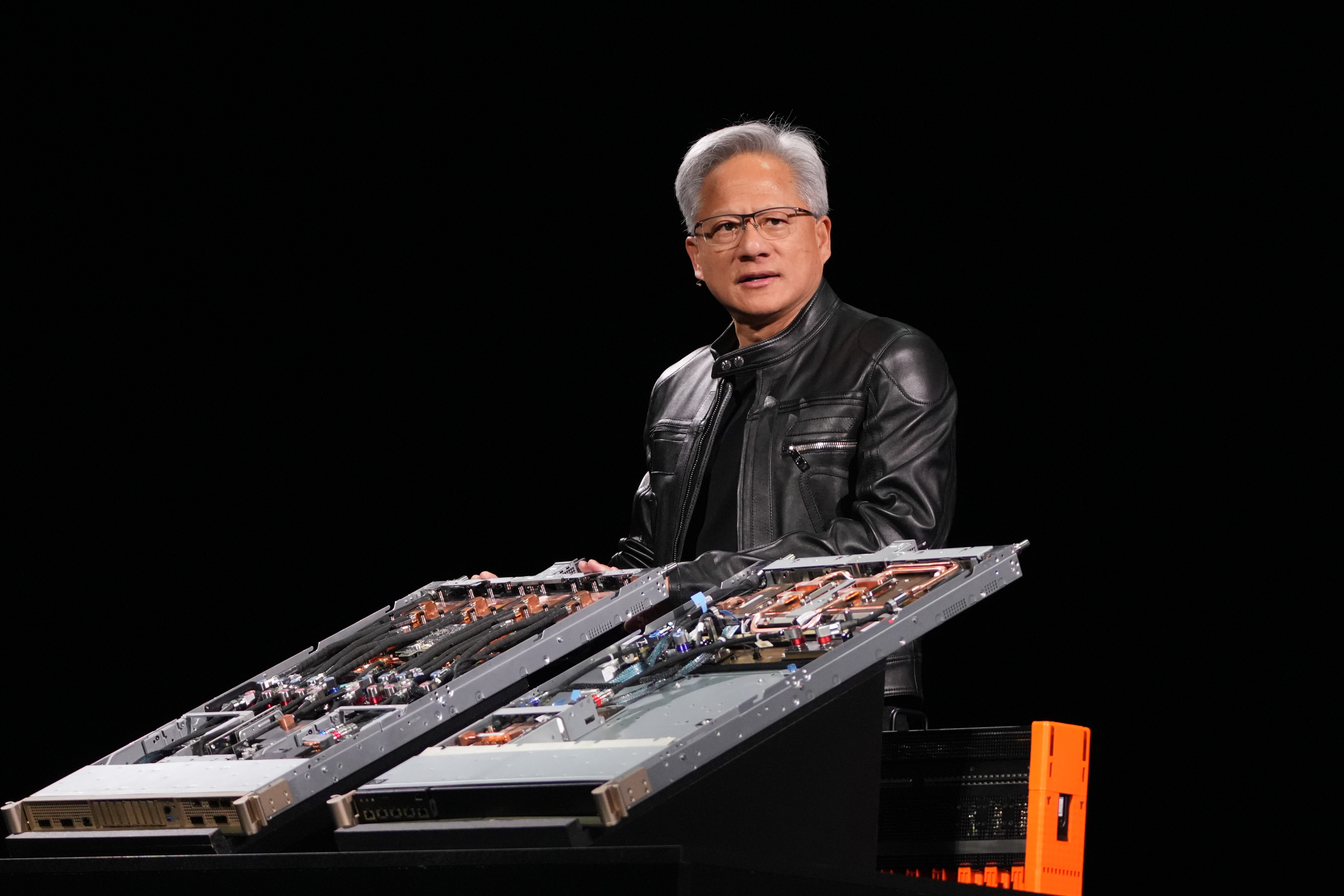

“I don’t know any company, industry [or] country who thinks that intelligence is optional — it’s essential infrastructure,” Jensen Huang, Nvidia’s chief executive, said in May. “We’re clearly in the beginning of the build-out of this infrastructure.”

© Mark Hertzberg/ZUMA Press Wire; Stephen Voss/Redux/eyevine; USA Today Network/Reuters; REUTERS/Daniel Cole

Yet building the facilities required to pursue this next frontier while also supporting billions of people using sophisticated AI models is far more complex, costly and energy-guzzling than the power grids, telecoms networks or even cloud computing systems that came before them.

Traditional data centres, such as those that have been built by Amazon and Microsoft, are “a proven model with proven returns and proven demand”, said Andy Lawrence, executive director of research at Uptime Institute, which inspects and rates data centres.

“To suddenly start building data centres which are so much denser in terms of power use, for which the chips cost 10 times as much, for which there is unproven demand and which eat up all available grid power and suitable real estate — all that is an extraordinary challenge and a gamble,” he said.

Grand designs

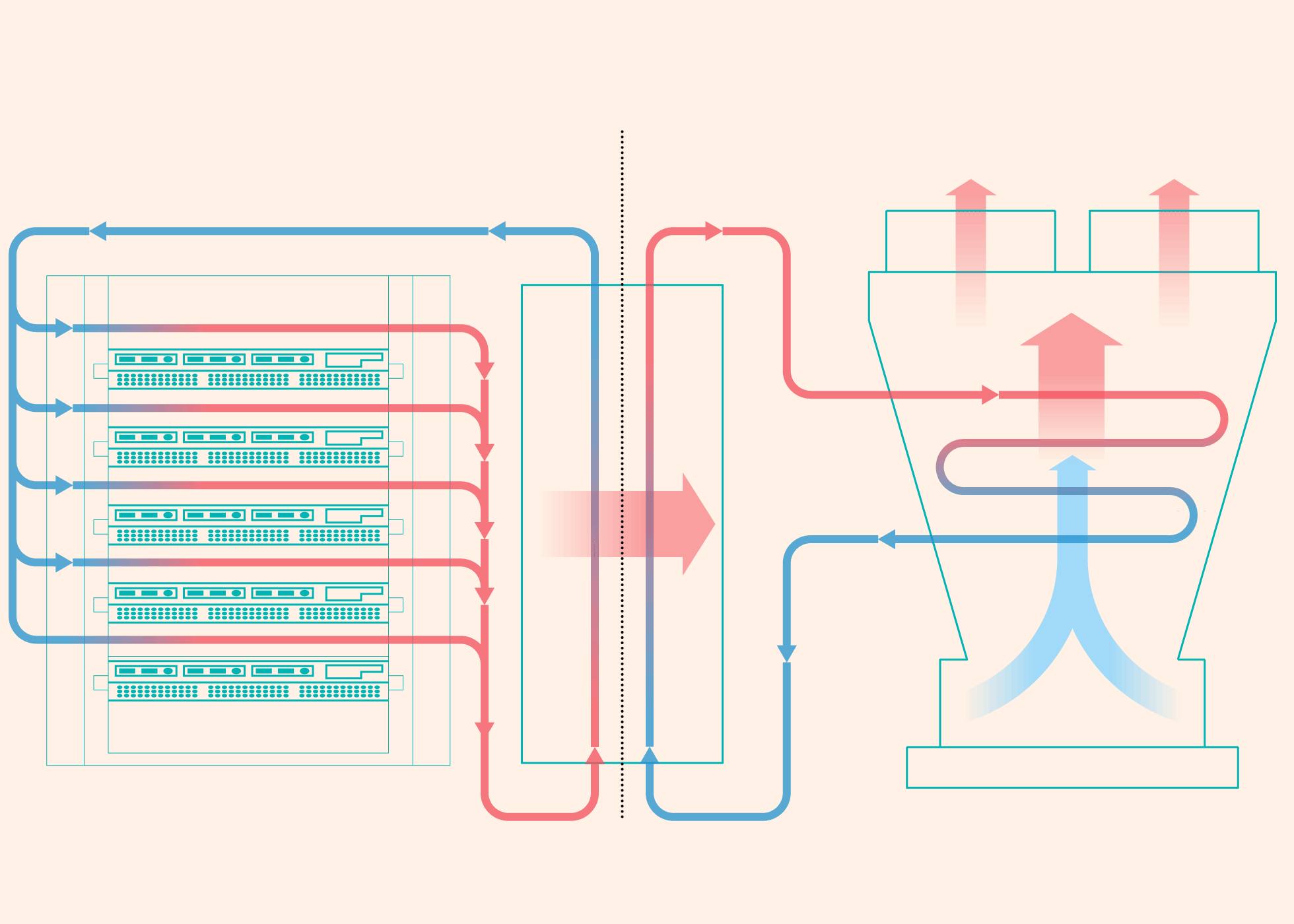

The dramatic increase in power demand from new AI chips has transformed data centre design.

Racks of computers running Nvidia’s chips consume at least 10 times as much power as regular web servers, and its latest processors give off so much heat that air conditioning is not enough to cool them.

“Everything has been turned upside down,” said Steven Carlini, vice-president of innovation and data center at Schneider Electric, a leading supplier of electrical infrastructure for data centres.

Conventional facilities were filled with rows of servers that took up most of the floorspace, but now “70 per cent of the footprint is outside of the IT room”, Carlini added, with the equipment that powers the chips and keeps them cool taking up a more significant role in building layouts.

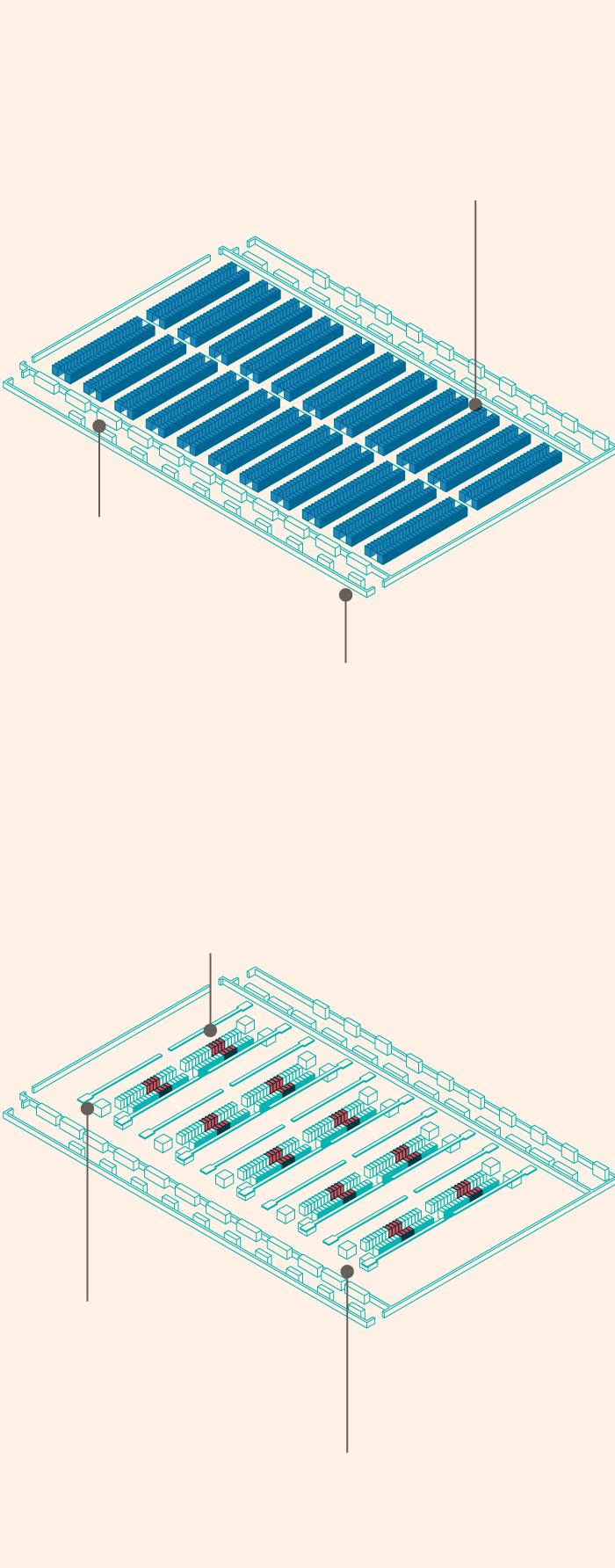

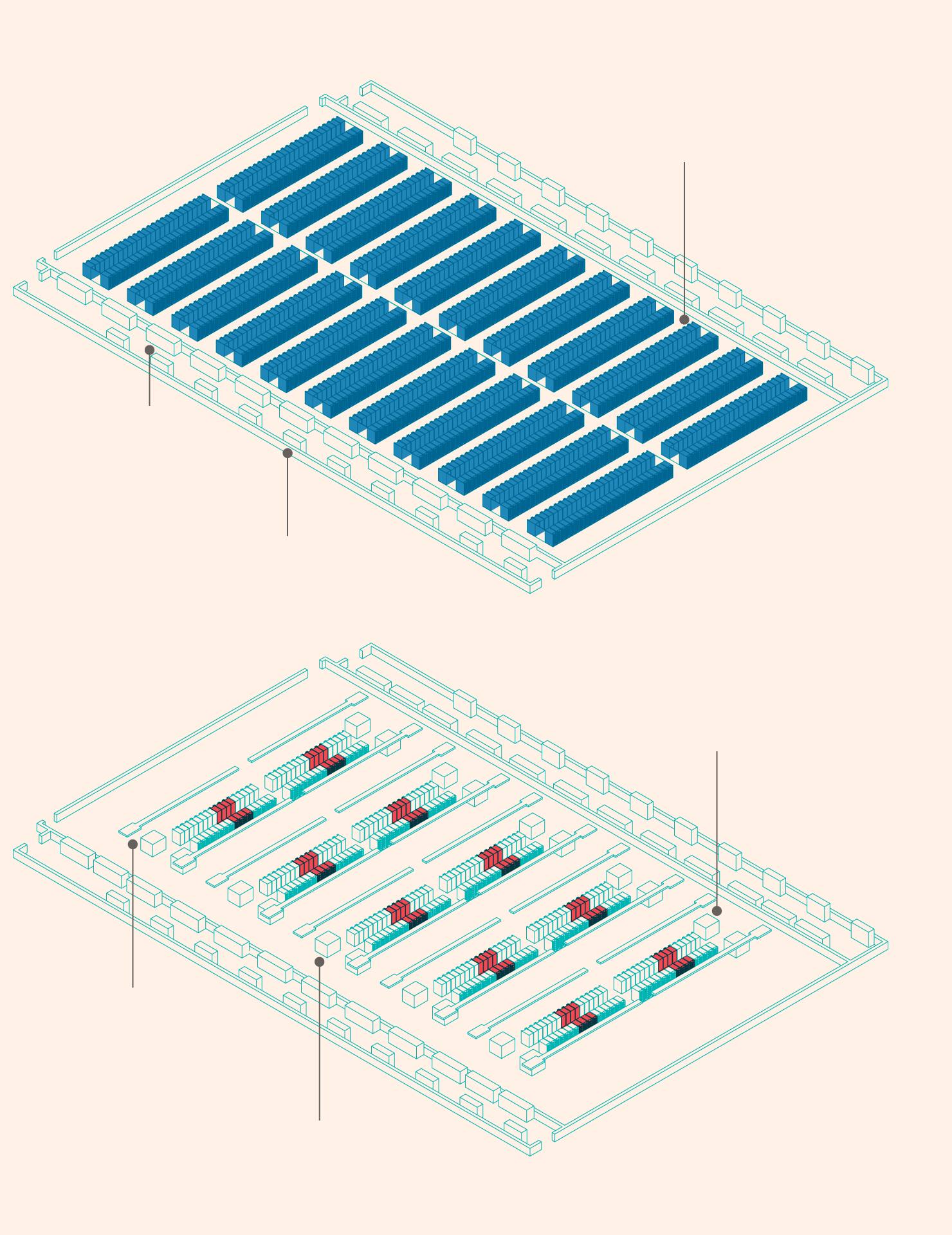

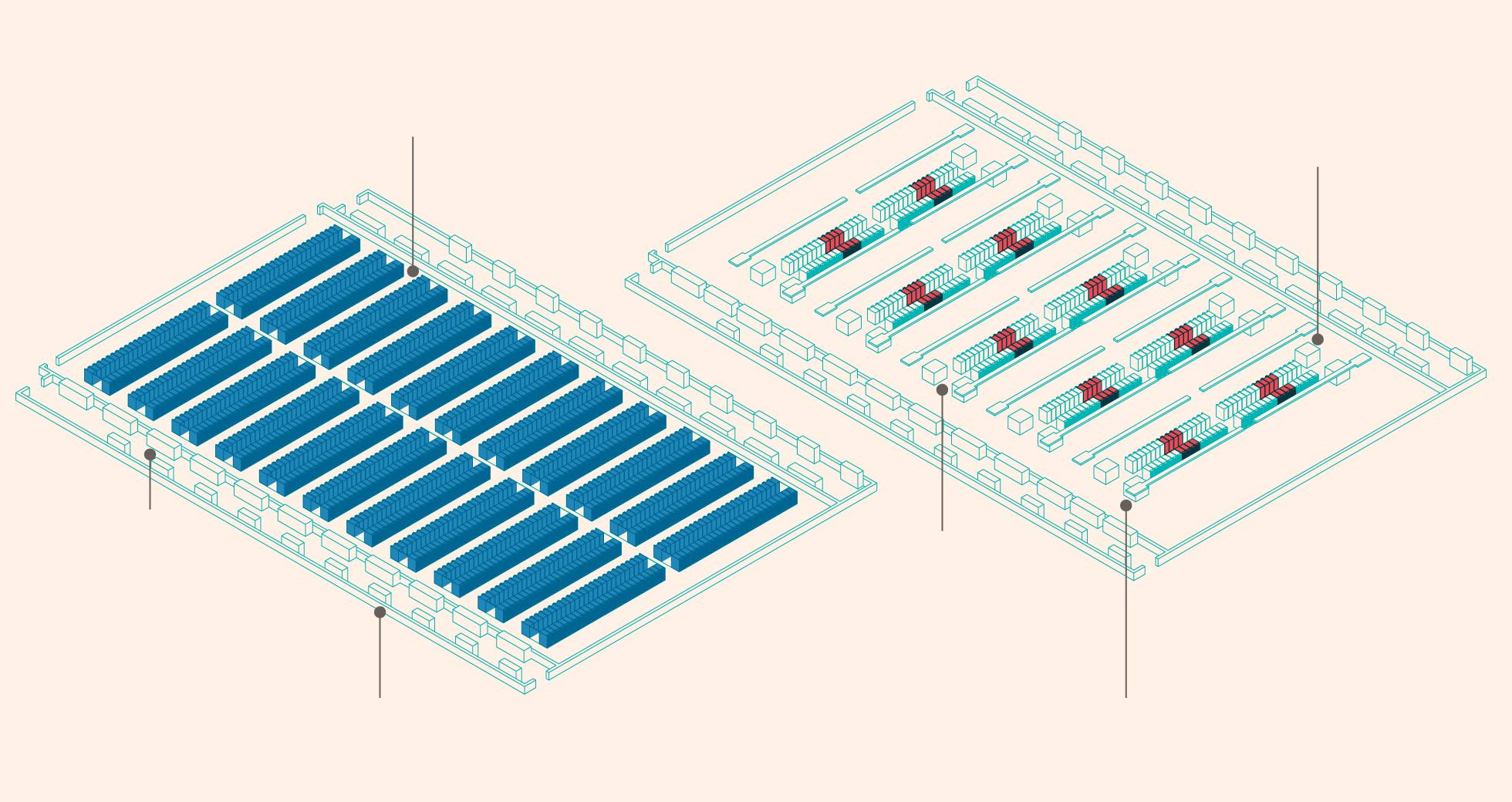

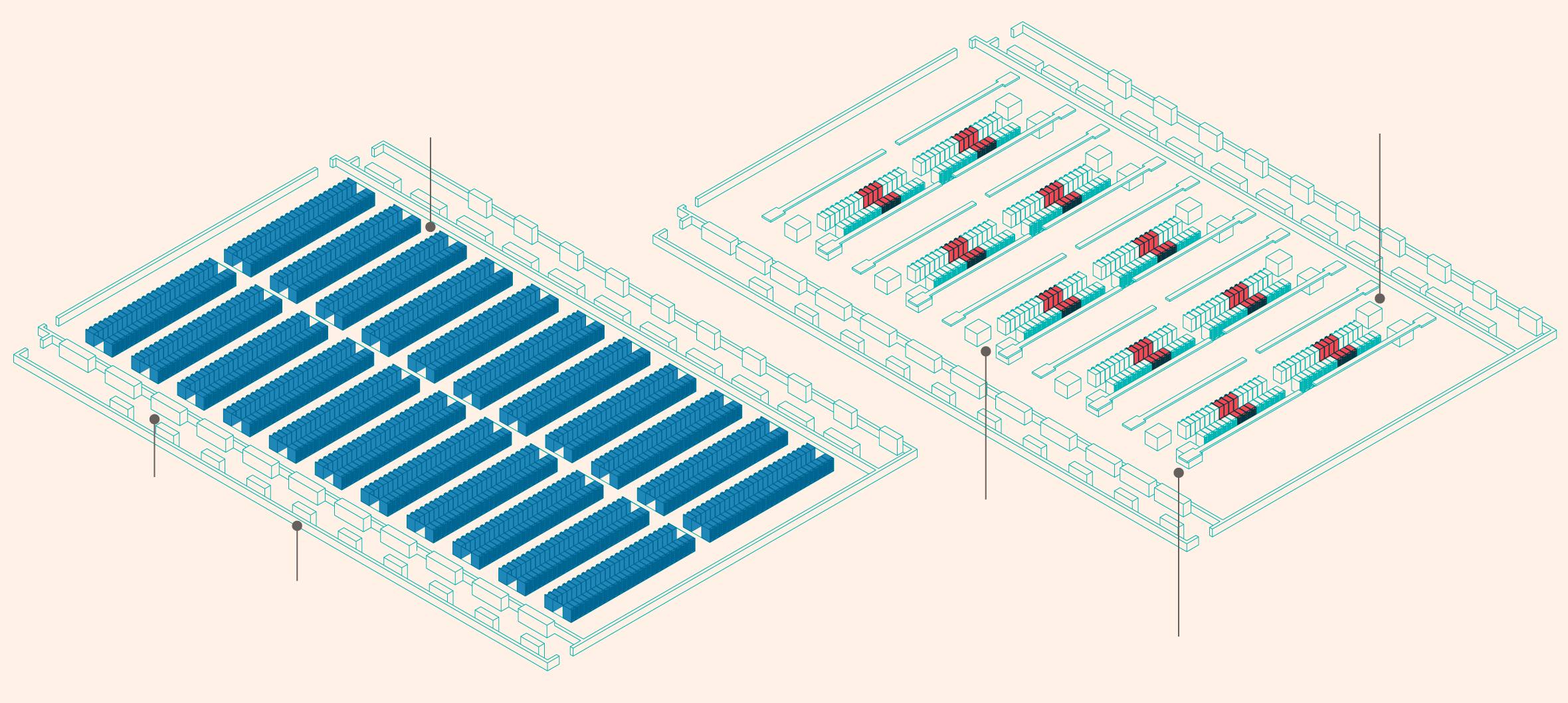

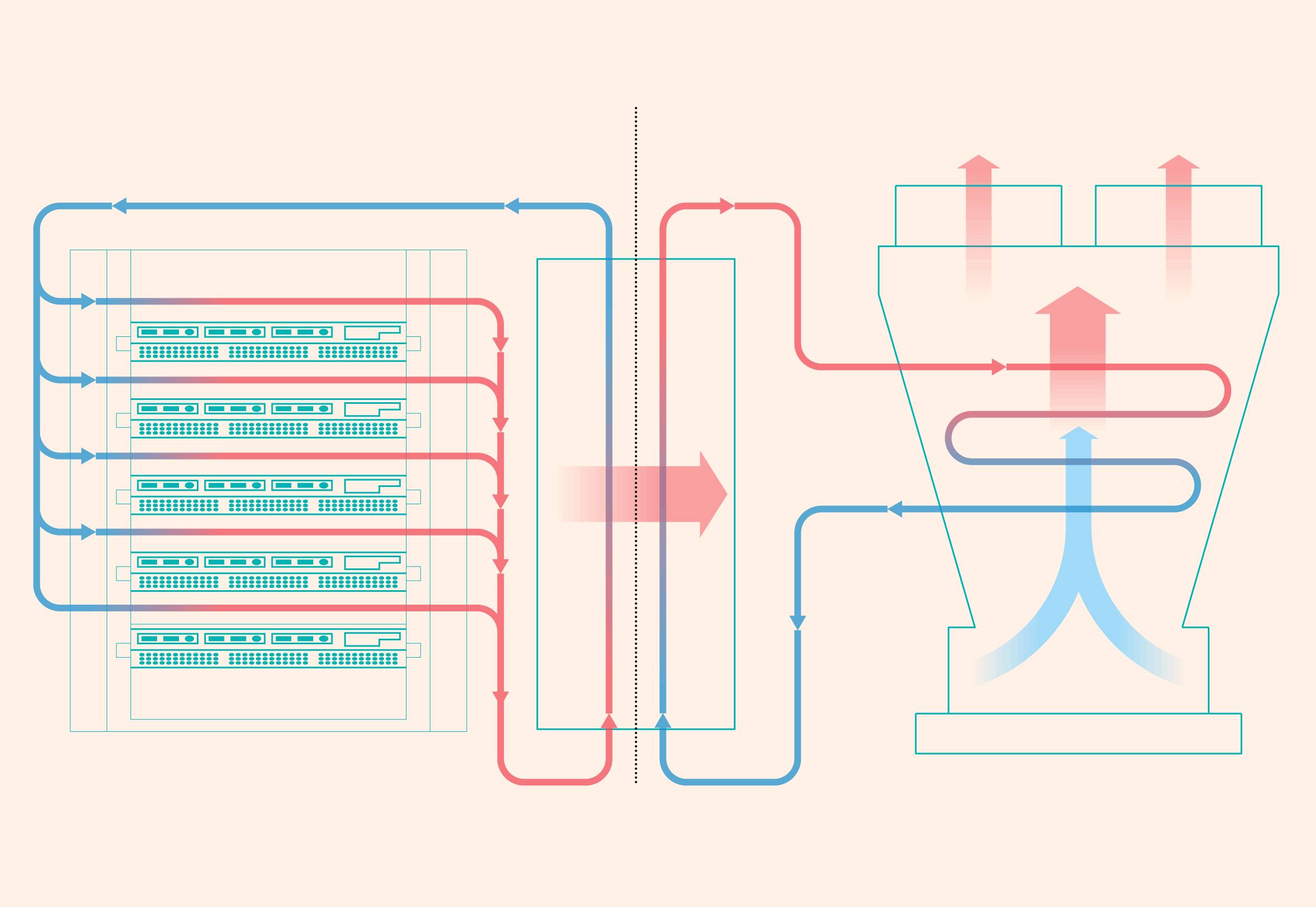

AI has transformed data centre design

Graphic: A side-by-side illustrated image showing the floor layout of a traditional data centre and an AI data centre. The traditional data centre includes many more racks packed closely together, while the AI data centre has fewer racks overall but more equipment for cooling and power distribution.

“Twenty years ago, a big data centre would have been 20 megawatt”, said Chase Lochmiller, chief executive of data centre developer Crusoe, referring to the amount of electricity required. “Today, a big data centre is a gigawatt or more . . . We believe we can build multi-gigawatt campuses.”

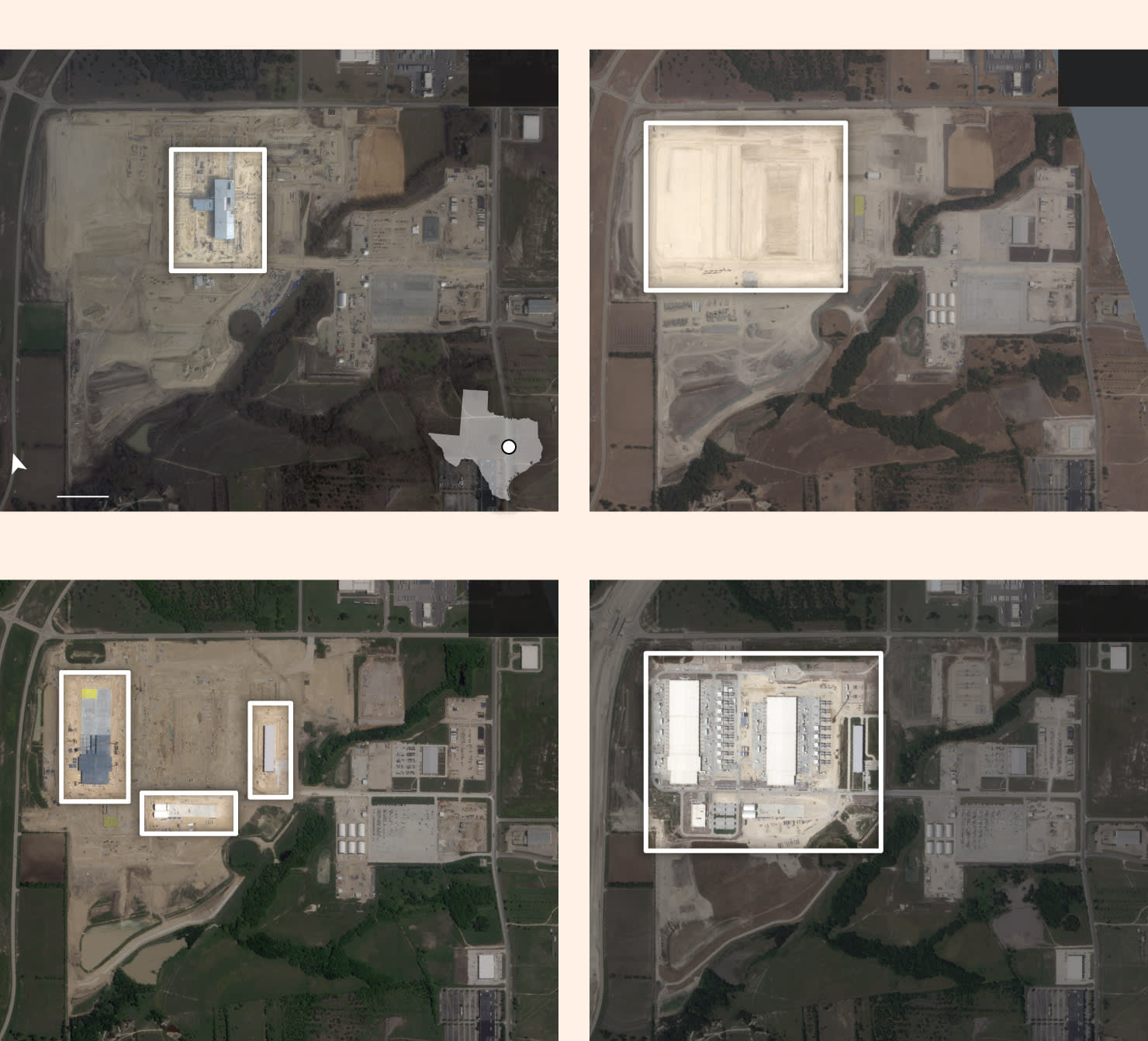

AI is reshaping how computing infrastructure is built so quickly that Meta ripped down a data centre that was still under development and redesigned it for higher-powered chips. Satellite imagery shows how in 2023, the creator of the Llama AI models knocked down building structures at a site in Texas before restarting construction as part of a wider overhaul of infrastructure strategy.

Behind this transformation lies a wholesale shift away from the kinds of chips that have for decades powered the vast majority of personal computers and servers. Most PCs in the 1990s and 2000s relied primarily on a central processing unit — the “Intel inside” — with an optional supporting cast of graphics accelerators and sound cards for playing games or making music. As cloud computing took off in the 2010s, Amazon Web Services and the infrastructure powering Google or Facebook’s internet services closely resembled those commodity CPU-based computers.

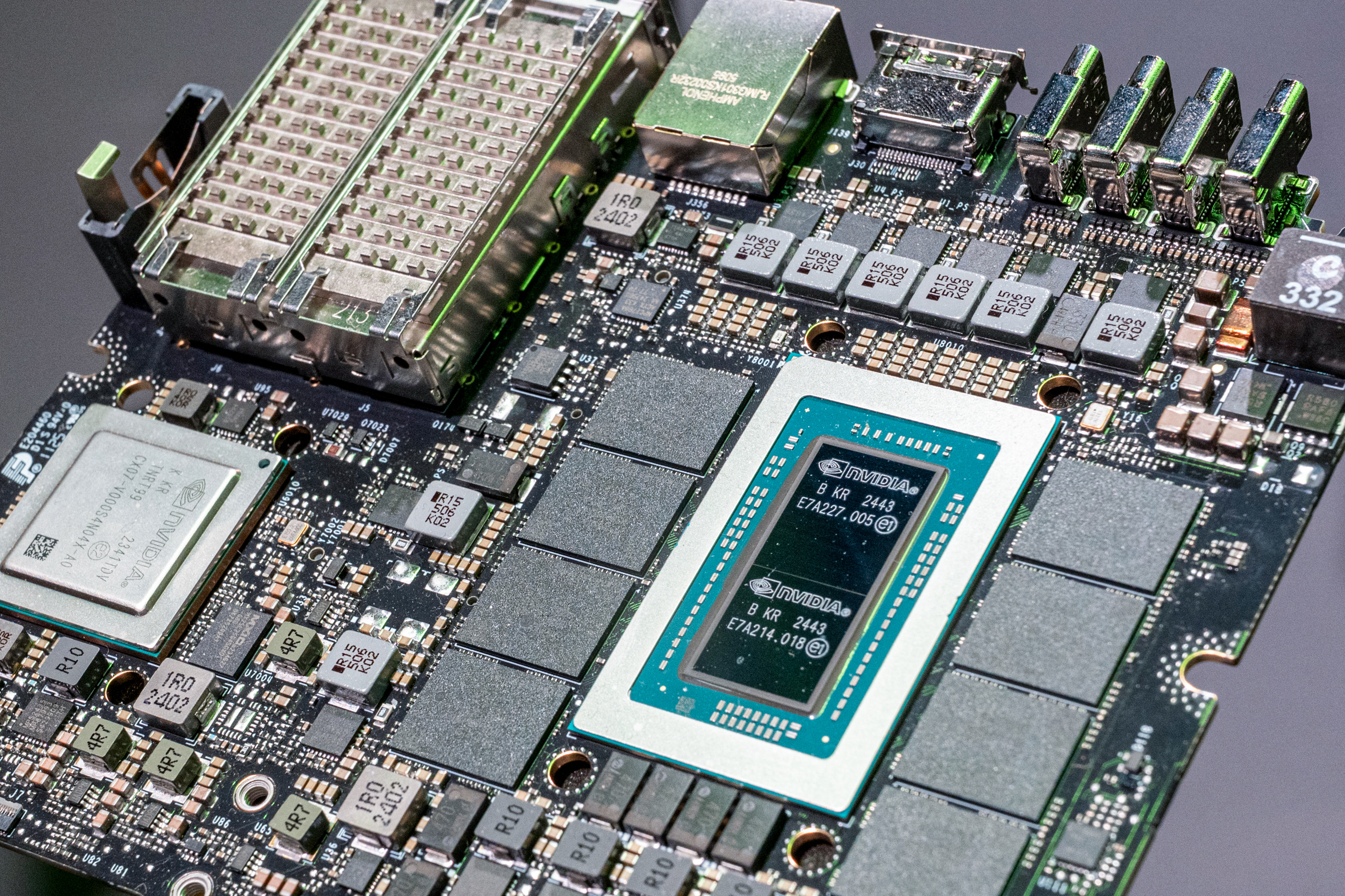

Today’s AI infrastructure is built around a descendant of the humble graphics card: what was once a mere passenger is now in the driving seat. Nvidia’s graphics processing units, or GPUs, have become the key component of a modern data centre thanks to their ability to process huge amounts of data “in parallel” — similar to how they made computer games run smoother.

CPU vs GPU

The ability of GPUs to perform parallel operations makes them ideal for the computing power needed to train and use large language models

The CPU will draw each pixel one-by-one/complete tasks in a sequential way

The GPU will split the image up into multiple pixels/complete tasks in a concurrent way

Nvidia executives have likened a CPU to reading a book one page at a time, while a GPU tears up the pages and charges through them all at once. While CPUs can handle a wide variety of tasks, GPUs are most suited to running the same type of calculation over and over again — ideal for training a huge AI model.
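The contrast can be sketched in a few lines of Python. This is only an illustration of how the work is divided, not of real GPU hardware: the `brighten` operation, the sample pixel values and the use of a thread pool are assumptions chosen for the example, and Python threads do not deliver GPU-style speed-ups.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int) -> int:
    """Apply the same small calculation to one pixel (hypothetical operation)."""
    return min(pixel + 40, 255)


def sequential(pixels: list[int]) -> list[int]:
    """CPU-style: work through the pixels one after another."""
    return [brighten(p) for p in pixels]


def chunked_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    """GPU-style decomposition: split the image into chunks and apply the
    same calculation to every chunk concurrently."""
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order, so the results can be stitched back together
        results = pool.map(sequential, chunks)
    return [p for chunk in results for p in chunk]


image = [0, 64, 128, 200, 230, 255, 10, 90]
# Both approaches produce the same picture; only the way the work is divided differs
assert sequential(image) == chunked_parallel(image)
```

The point of the sketch is that every pixel receives an identical, independent calculation, which is what allows the work to be divided among many workers at once.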

Clustering

The relentless quest for computing power is spurring a real estate boom driven by hyperscalers — large cloud providers including Amazon, Microsoft and Google — which are developing clusters of data centres so that hundreds of thousands of chips can work in concert to fine-tune AI models.

“Training has been really focused on mega clusters where you need as many GPUs and accelerators in as close a physical proximity as you can get,” said Kevin Miller, former vice-president of global data centres at Amazon Web Services. “The closer physical proximity reduces latency, which improves the speed [at which] you can train new models.”

The sheer number of chips being deployed by model builders including OpenAI and Anthropic has led hyperscalers and data centre operators, including QTS and Equinix, to hunt for large parcels of land that can host clusters.

A traditional data centre supports thousands of customers, with servers shared out as demand requires. But now many of these sites, which Huang has dubbed “AI factories”, are being built for a single company — or nation state — inverting the traditional model.

Crusoe is building eight data centre buildings, with a total capacity of 1.2GW, in Abilene, Texas, for OpenAI. Oracle will provide about 400,000 Nvidia GPUs at a cost of roughly $40bn. Abilene is part of OpenAI’s proposed $100bn Stargate project, which will be financed largely by Japan’s SoftBank, to help meet some of its processing needs as it pursues superintelligence.
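A rough sense of scale can be drawn from the Abilene figures. The short calculation below is a back-of-envelope sketch rather than a reported breakdown: it assumes all 400,000 GPUs sit at the Abilene site and share its full power budget, and that the $40bn covers only those GPUs.

```python
# Figures quoted in the article
gpus = 400_000            # Nvidia GPUs Oracle is to provide
gpu_cost_usd = 40e9       # roughly $40bn
site_power_w = 1.2e9      # 1.2GW across eight buildings

# Assumption: all of the GPUs are housed at the Abilene site, so the whole
# power budget (chips, networking, cooling) is shared between them.
cost_per_gpu = gpu_cost_usd / gpus
power_per_gpu_kw = site_power_w / gpus / 1_000

print(f"Cost per GPU: ${cost_per_gpu:,.0f}")                 # Cost per GPU: $100,000
print(f"All-in power per GPU: {power_per_gpu_kw:.1f} kW")    # All-in power per GPU: 3.0 kW
```

On those assumptions, each GPU accounts for about $100,000 of spending and 3kW of site capacity once supporting equipment is included.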

Social media giant Meta is building a 2GW facility in Richland, Louisiana, while Elon Musk’s xAI is targeting 1.2GW across several sites in Memphis, Tennessee. Amazon, meanwhile, is developing a 2.2GW site for Anthropic in New Carlisle, Indiana.

The head of an investment fund that backs Crusoe said the number of data centre construction projects had reached frenzied levels, with a “build it and they will come” mentality. The creation of these facilities in close proximity has a flywheel effect, with a trained local workforce able to repeat construction projects at nearby sites.

Access to cheap and plentiful energy has emerged as the key factor in determining site location, with many operators power-constrained. “We very much prioritise energy and power first,” said Bobby Hollis, vice-president of energy at Microsoft.

A study from Oxford university found that close to 95 per cent of commercially available AI computing power is operated by US and Chinese tech groups.

China’s drive to expand its AI computing infrastructure and capitalise on the AI boom has spurred local governments, such as those in the remote regions of Xinjiang and Inner Mongolia, to construct data centres. After a détente between Beijing and Washington, Nvidia is set to resume shipments of AI chips to China. But the US still has export controls preventing the sale of the most powerful AI semiconductors to the Chinese market. The city of Johor Bahru in Malaysia is emerging as an AI hub on the back of demand from Chinese developers.

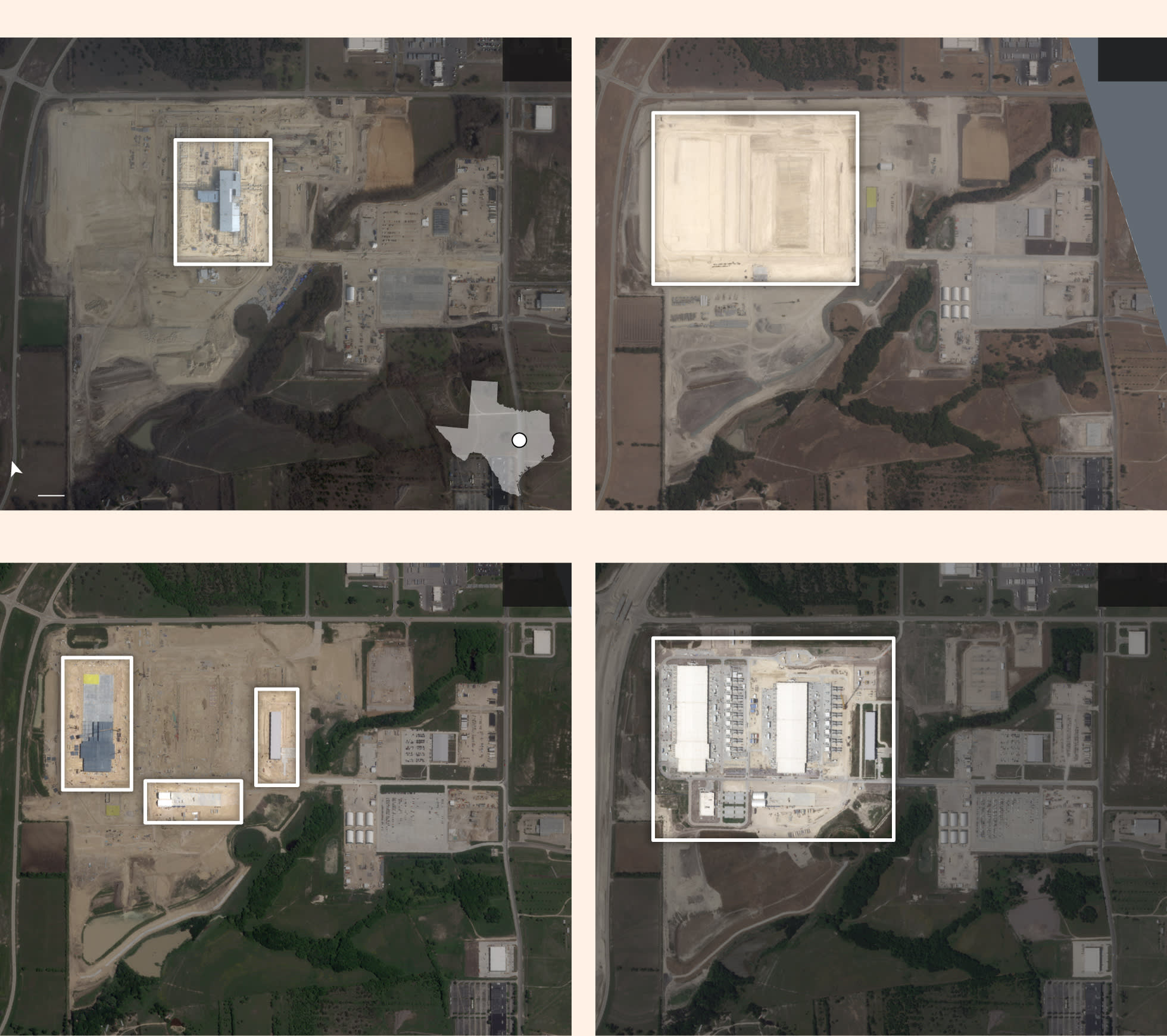

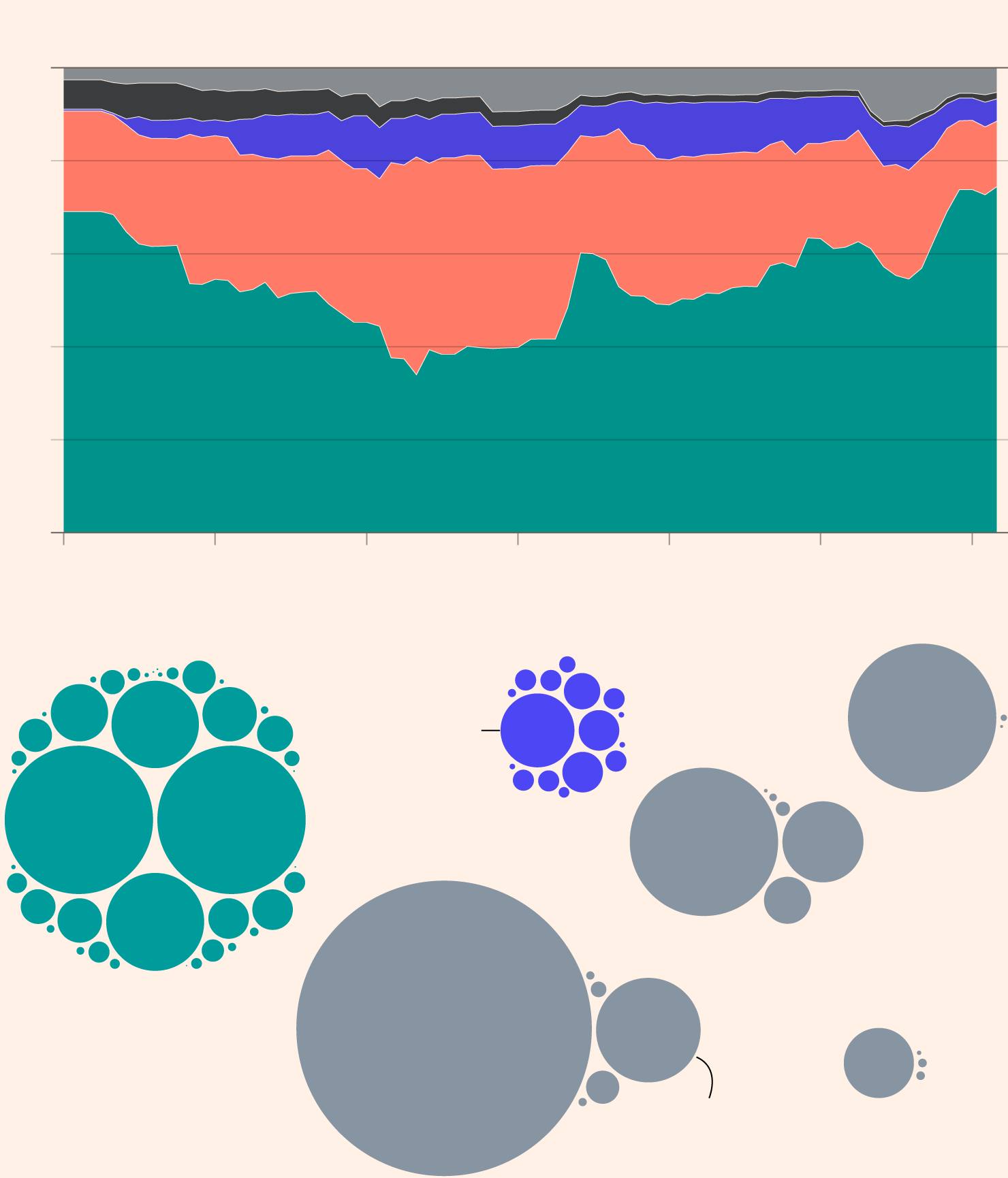

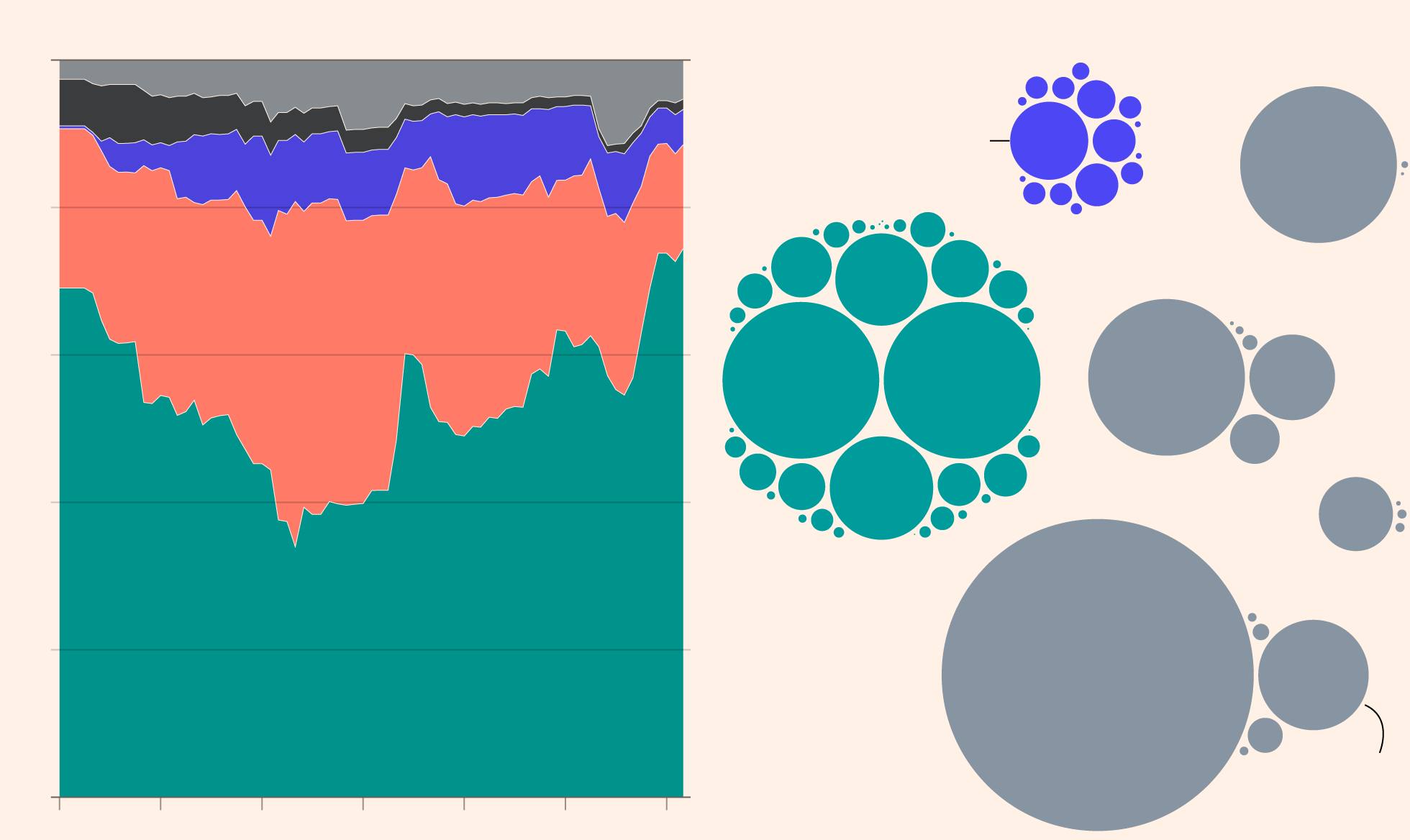

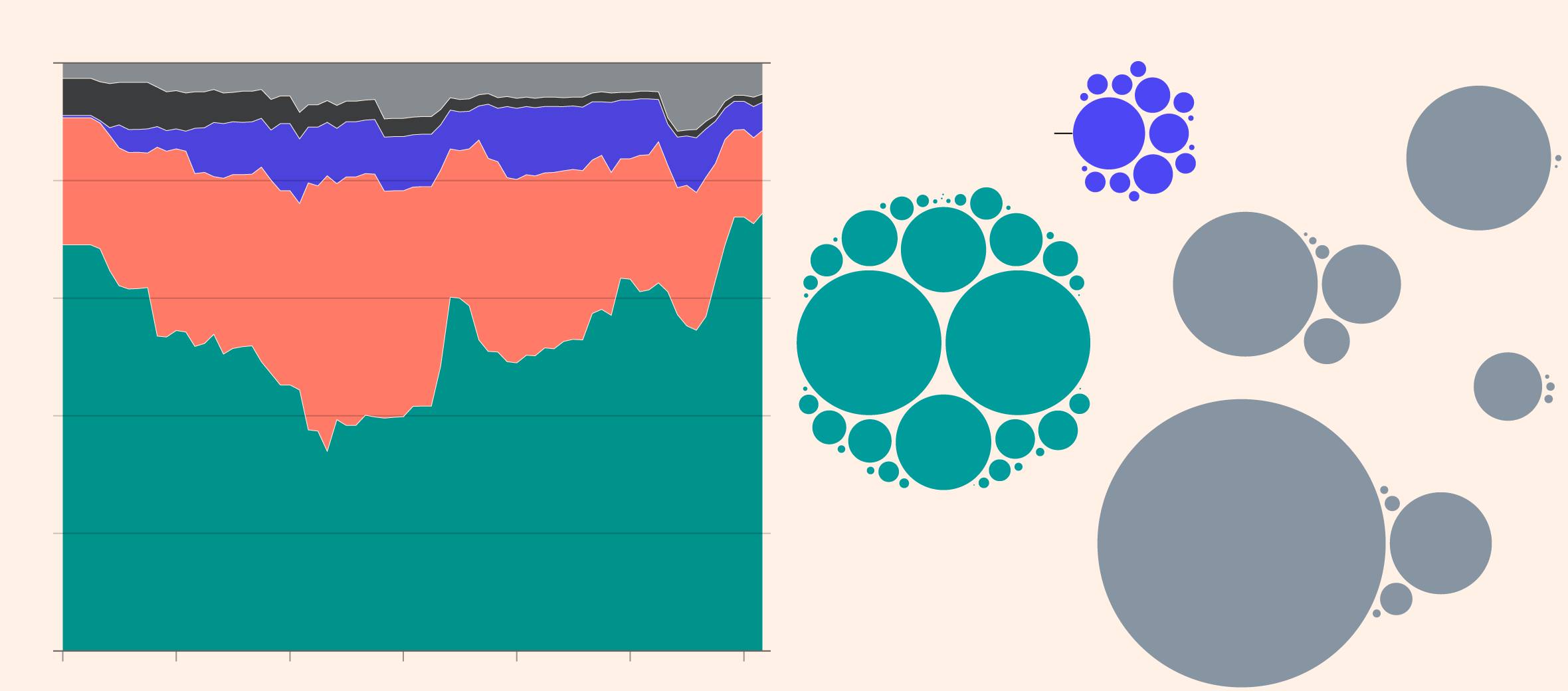

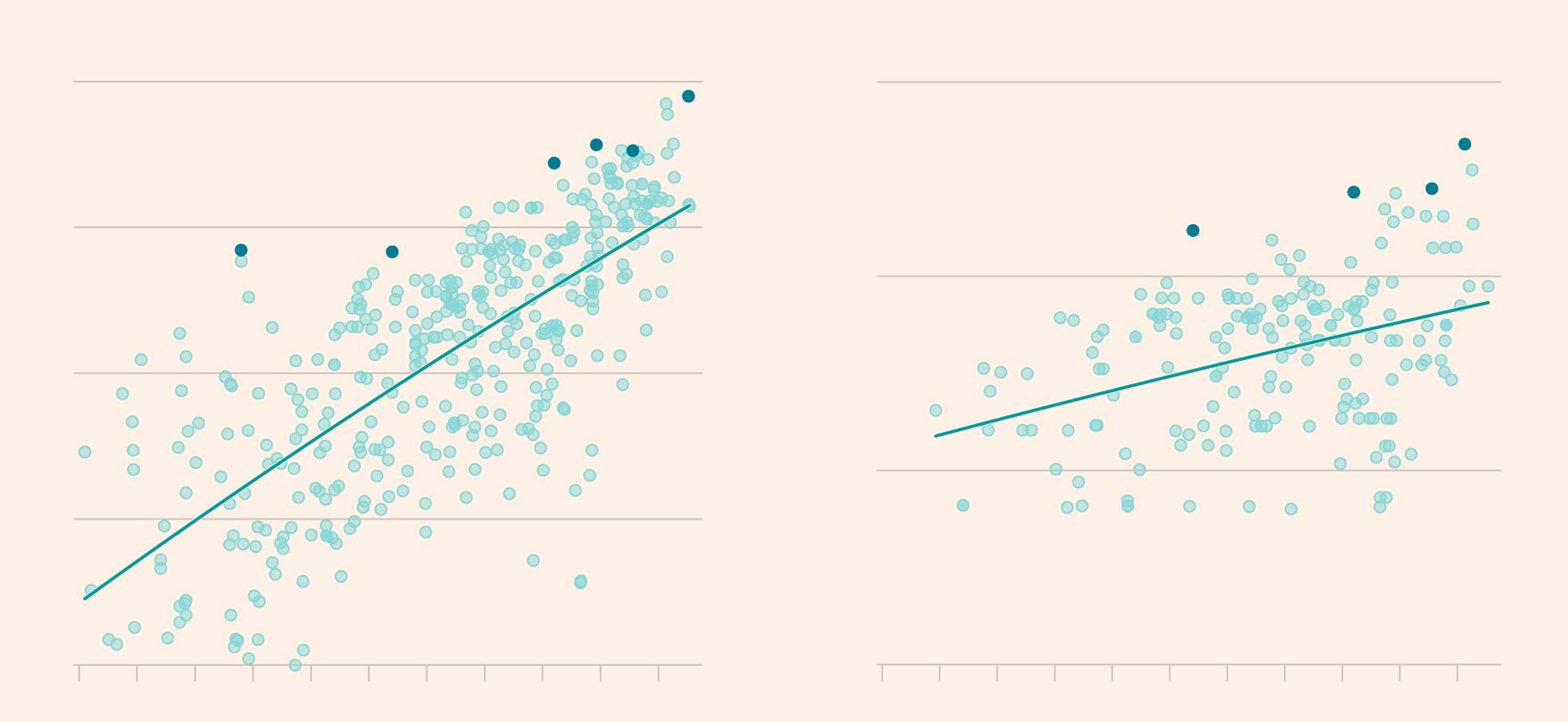

The US dominates AI computing power

Graphic: Data visualisation showing the US dominating global AI supercomputing performance from 2019 to 2025, based on Epoch.ai estimates. A stacked area chart on the left shows the US maintaining the largest share, with almost 75% in 2025, followed by China, the EU, Japan, and others. On the right, a bubble chart maps planned AI supercomputer clusters by country, with the largest circles representing major projects in the US, UAE, and Saudi Arabia.

Source: Epoch.ai

Gulf states are also preparing to pour billions into AI infrastructure. The United Arab Emirates announced a huge data centre cluster for OpenAI and other US companies as part of the Stargate project, with ambitions for up to 5GW of power.

Humain, Saudi Arabia’s new state-owned AI company, plans to build “AI factories” powered by several hundred thousand Nvidia chips over the next five years.

In April, the EU said it would mobilise €200bn “to make Europe an AI continent” — with plans for five AI gigafactories to “develop and train complex AI models at an unprecedented scale”.

Energy usage

A lack of public data means estimates for the amount of power data centres use vary widely. According to the International Energy Agency, energy usage is forecast to climb from 415 terawatt hours in 2024 to beyond 945 TWh by 2030, roughly equal to the electricity consumption of Japan. This rush for power is leading to new facilities running on whatever energy source is available.
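The IEA figures imply a steady rate of growth that is easy to work out. The sketch below assumes demand compounds at a constant annual rate between 2024 and 2030, which is a simplification made for illustration and not part of the agency's forecast.

```python
start_twh, end_twh = 415.0, 945.0   # IEA figures quoted above
years = 2030 - 2024

growth_multiple = end_twh / start_twh
# Assumption: demand grows at a constant compound rate between the two years
annual_growth = growth_multiple ** (1 / years) - 1

print(f"Total increase: {growth_multiple:.2f}x")
print(f"Implied annual growth: {annual_growth:.1%}")
```

Under that assumption, demand rises about 2.3 times over the period, or a little under 15 per cent a year.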

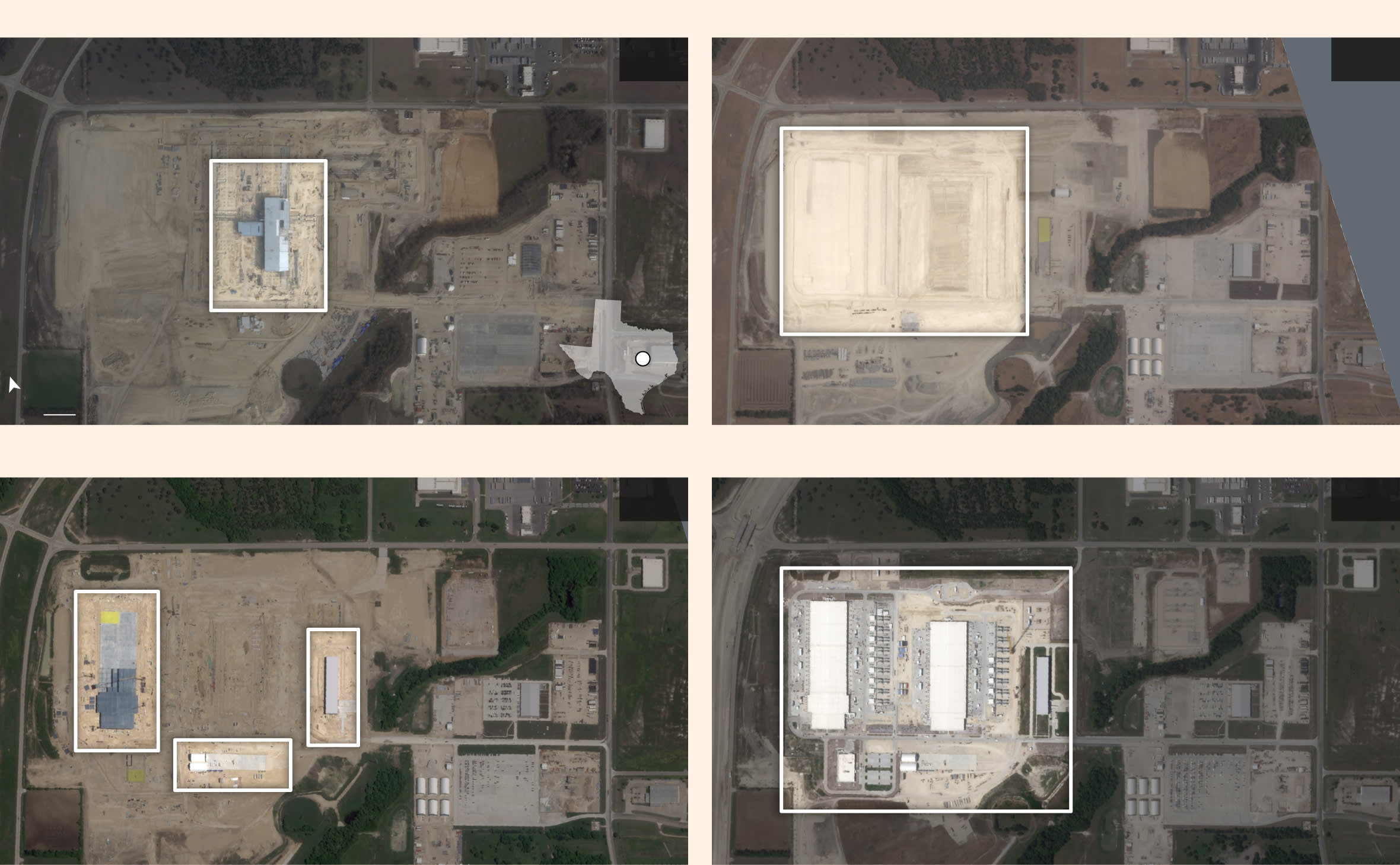

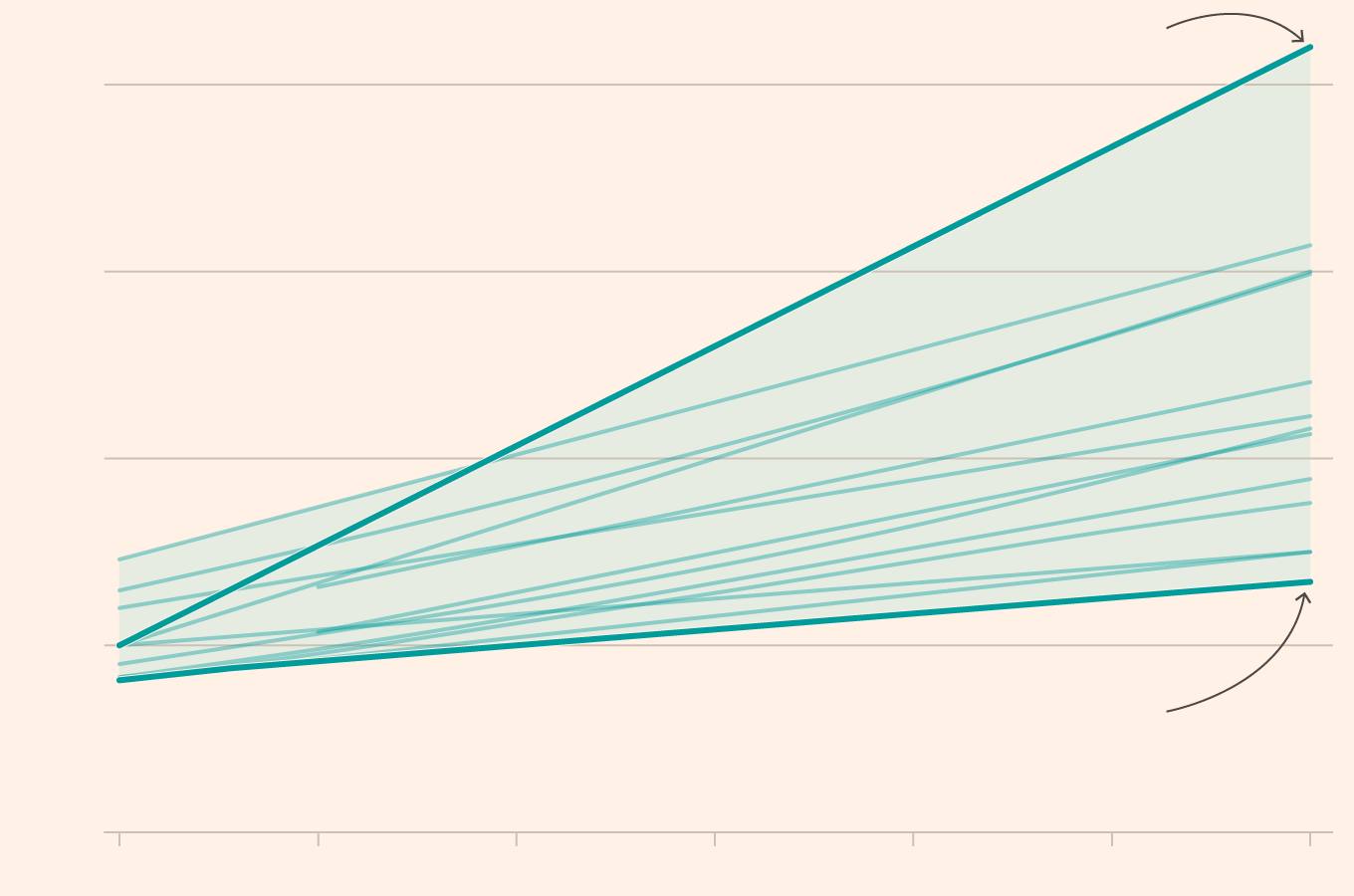

Data centre power demand forecasts

Estimated annual electricity from data centres to 2030, terawatt hours (TWh)

Graphic: A chart of projected energy demand from data centres from 2024 to 2030, showing a wide range of predictions: some estimates suggest a four-fold increase in demand to more than 2,000 terawatt hours, while others predict a much smaller increase from 400 to less than 800.

Source: Aurora Energy Research

Musk’s xAI used gas turbines at its data centre in Memphis as it awaited the installation of a new substation to connect it with the grid, with locals criticising the effect on air quality. All four hyperscalers have struck landmark deals for nuclear power in the past year. Microsoft is working with Constellation Energy to restart the Three Mile Island nuclear plant in Pennsylvania.

The rise in energy demand is a concern for utility providers. As well as requiring enormous amounts of power and running at a near constant rate, AI data centres experience surges in demand during a training run due to the sheer processing power needed to complete the calculations required to develop a new model.

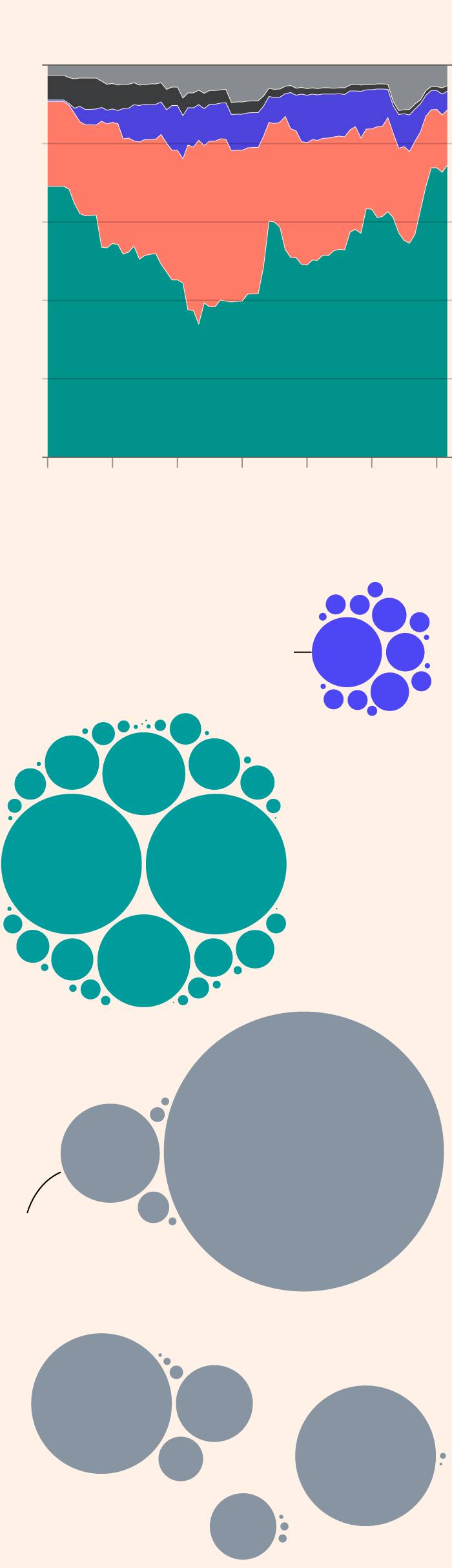

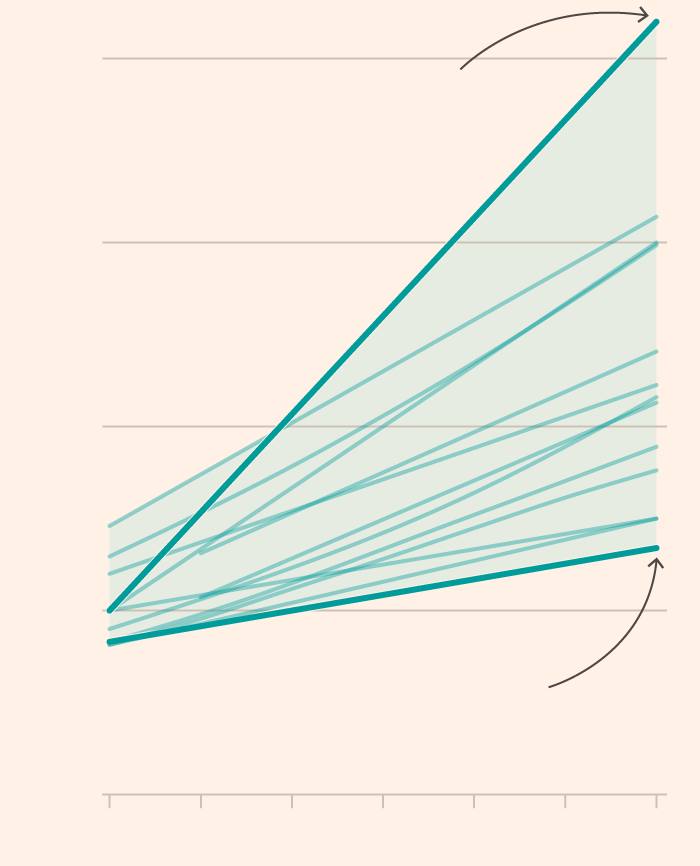

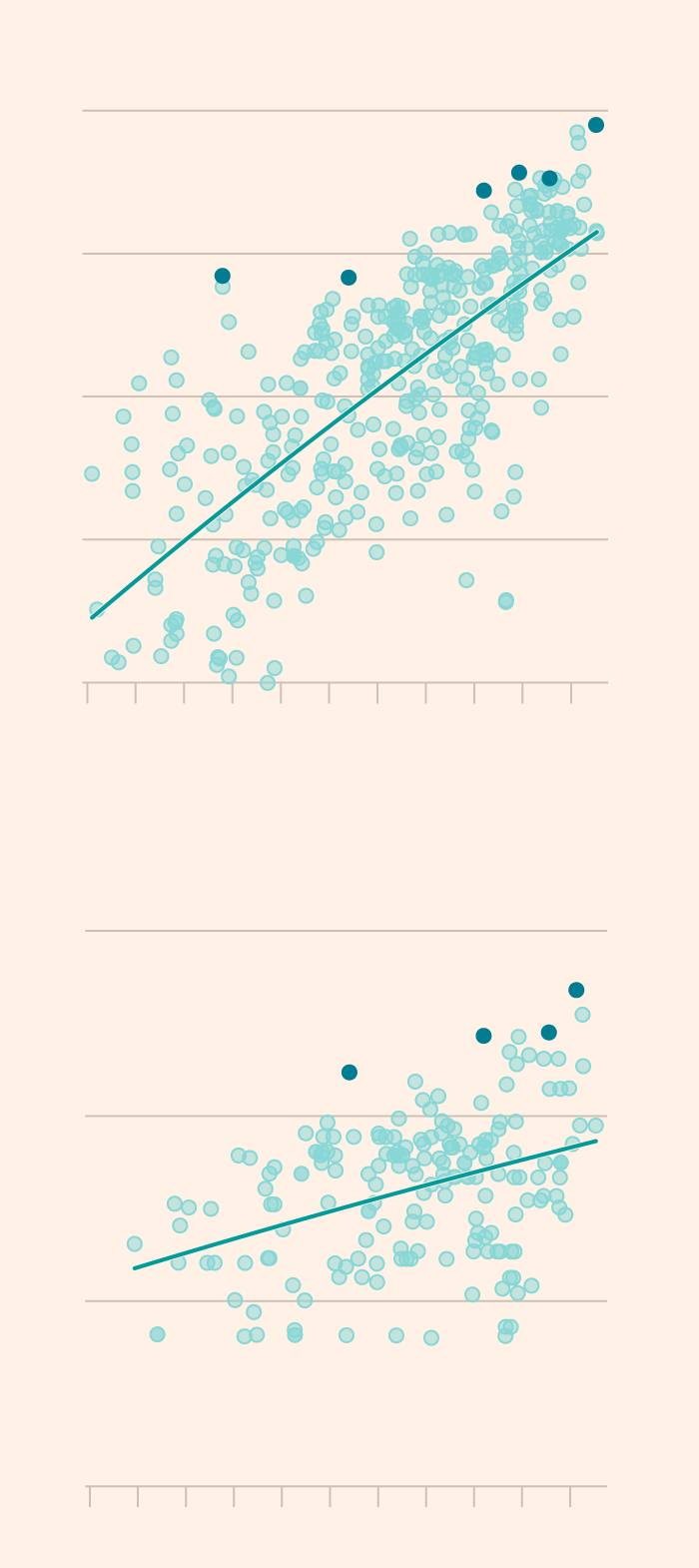

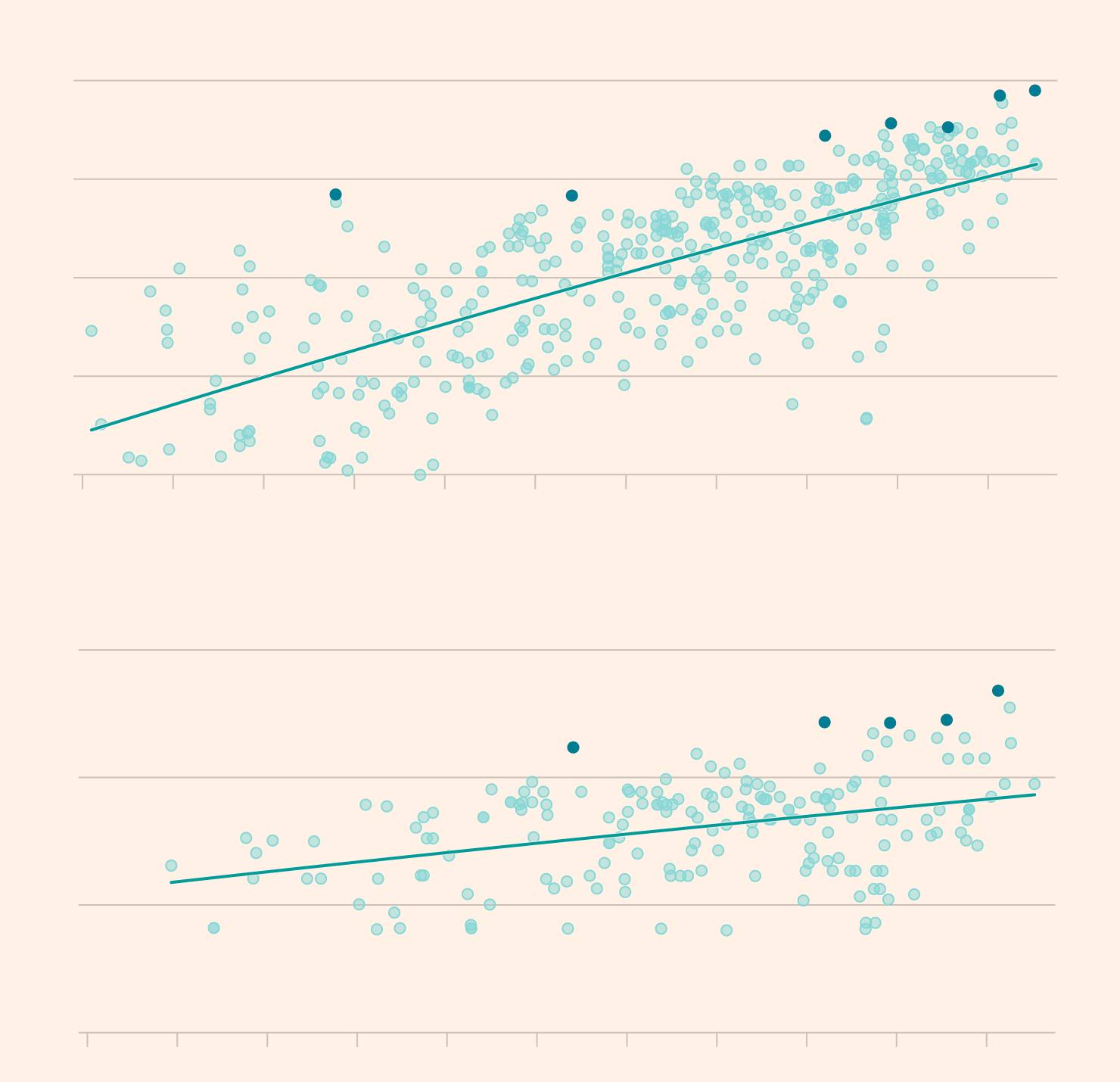

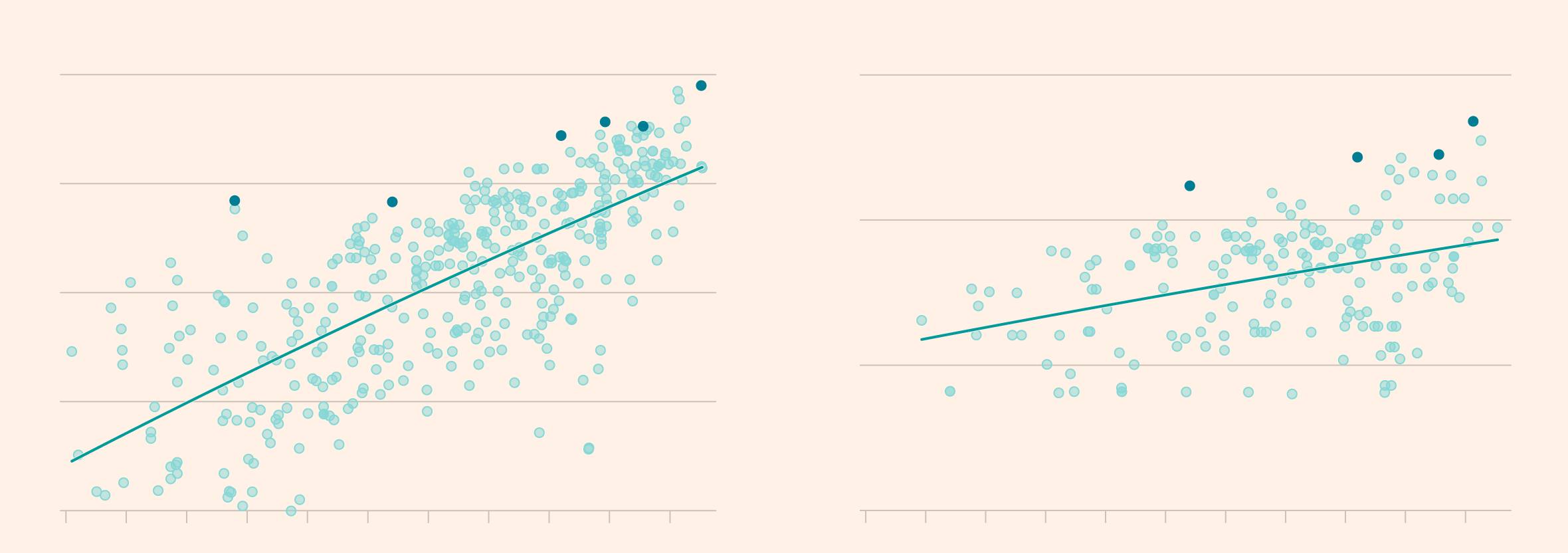

AI model training is using significantly more computing power and energy

Graphic: A pair of scatterplots showing a significant rise in compute power (a doubling every 5 months) and electricity consumption (doubling every 1.4 years) to train notable AI models over time. Visualisation highlights models like Grok 4, Llama 3, Gemini Ultra and GPT-4 among those at the top in terms of compute and power required to train.

Source: Epoch.ai
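The two doubling times in the Epoch.ai chart can be converted into yearly growth factors for comparison. This is a minimal sketch that assumes both trends hold steadily; the implied efficiency gain is a derived illustration, not a figure from Epoch.ai.

```python
MONTHS_PER_YEAR = 12

compute_doubling_months = 5        # training compute, per the Epoch.ai chart
energy_doubling_years = 1.4        # training electricity, per the same chart

# Growth factor over one year for a quantity that doubles every T years: 2 ** (1 / T)
compute_per_year = 2 ** (MONTHS_PER_YEAR / compute_doubling_months)
energy_per_year = 2 ** (1 / energy_doubling_years)

# Assumption: both trends hold steadily, so their ratio approximates the
# yearly gain in compute delivered per unit of electricity.
efficiency_gain = compute_per_year / energy_per_year

print(f"Compute grows about {compute_per_year:.1f}x a year")
print(f"Electricity grows about {energy_per_year:.1f}x a year")
print(f"Implied efficiency gain: about {efficiency_gain:.1f}x a year")
```

On these numbers, training compute grows roughly 5.3 times a year while electricity use grows about 1.6 times, suggesting hardware and software are absorbing much, but not all, of the extra load.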

These spikes threaten cascading power outages, affecting homes and businesses that feed off the same grid network. Last summer, utility providers in Virginia had to grapple with a sudden surge in power after a cluster of facilities switched to back-up generators as a safety precaution, leading to an excess supply that risked grid infrastructure.

With abundant power the priority, operators have also ended up in areas with significant water constraints. Hyperscale and colocation sites in the US consumed 55bn litres of water in 2023, according to researchers at the Lawrence Berkeley National Laboratory (LBNL). Indirect consumption tied to energy use is markedly higher at 800bn litres a year, equivalent to the annual water usage of almost 2mn US homes.
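The water figures can be put on a household scale with some simple arithmetic. The sketch below treats "almost 2mn" homes as exactly 2mn, an assumption that slightly understates the per-home figure.

```python
direct_litres = 55e9        # direct consumption in 2023
indirect_litres = 800e9     # indirect consumption tied to energy use, per year
homes = 2e6                 # "almost 2mn US homes", treated here as exactly 2mn

indirect_to_direct = indirect_litres / direct_litres
litres_per_home_year = indirect_litres / homes
litres_per_home_day = litres_per_home_year / 365

print(f"Indirect use is about {indirect_to_direct:.1f} times direct use")
print(f"Per home: {litres_per_home_year:,.0f} litres a year")
print(f"Per home: about {litres_per_home_day:,.0f} litres a day")
```

That works out at 400,000 litres per home each year, or roughly 1,100 litres a day, with indirect consumption about 14.5 times the direct figure.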

In 2023, Microsoft said that 42 per cent of its water came from “areas with water stress”, while Meta said roughly 16 per cent of its water usage was derived from similar areas during the same time period. Google said last year almost 30 per cent of its water came from watersheds with a medium or high risk of “water depletion or scarcity”. Amazon does not disclose its figure.

Data centres in drought-prone states such as Arizona and Texas have led to concern among locals, while residents in Georgia have complained that Meta’s development in the state has damaged water wells, pushed up the cost of municipal water and led to shortages that could see water rationed.

Some believe that this endless race for ever-greater computing power is misplaced.

Sasha Luccioni, AI and climate lead at open-source start-up Hugging Face, said alternative techniques to train AI models, such as distillation or the use of smaller models, were gaining popularity and could allow developers to build powerful models at a fraction of the cost.

“It’s almost like a mass hallucination where everyone is on the same wavelength that we need more data centers without actually questioning why,” she said.

Cooling

Increased chip density has another unwanted effect: heat. About two-fifths of the energy used by an AI data centre stems from cooling chips and equipment, according to consultants McKinsey.

Early data centres running cloud workloads deployed industrial-grade air conditioning units similar to those used in offices to cool servers. But as chips have started to draw more power, it has become harder to keep them within their safe operating range of 30C to 40C, with data centres requiring more advanced cooling methods to avoid malfunctions. The challenge has led to significant investment in cutting-edge innovations.

Operators have turned to installing pipes filled with cold water in the server room to transfer heat away from equipment. This water is then directed to large cooling towers that use evaporation to reduce the facility’s temperature to a safe range. But the approach leads to water loss, with a single tower churning through about 19,000 litres per minute.

Microsoft and others have adopted a closed-loop system that depends on chillers (in effect, refrigerators) to cool the water. This process is less wasteful and more efficient than evaporative options.

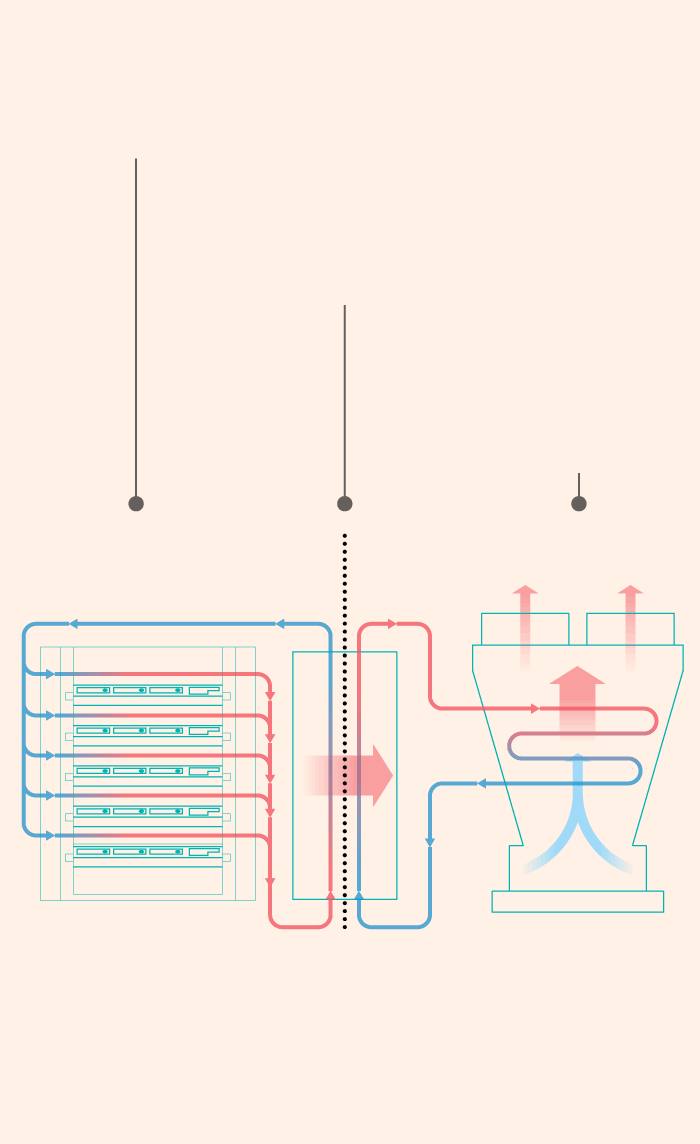

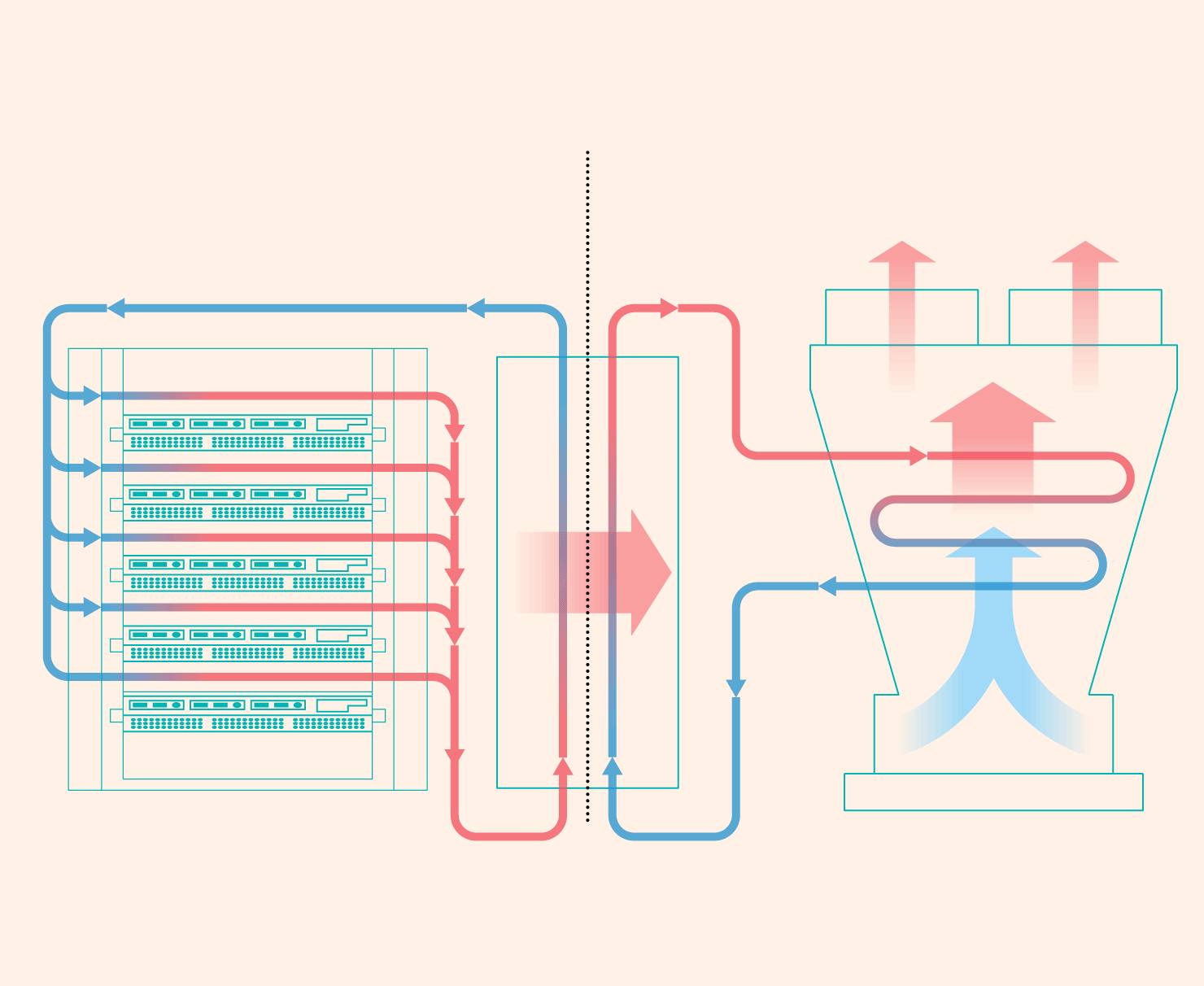

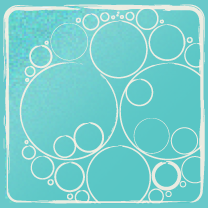

Direct-to-chip cooling addresses increased heat generated by AI servers

Graphic: Diagram showing a direct-to-chip liquid cooling system used for AI server racks. On the left, coolant fluid flows through cold plates in server racks (secondary loop), absorbing heat from AI chips. The heated coolant then moves to a Coolant Distribution Unit (CDU) in the centre, where heat is exchanged out of the fluid. The now-cooled fluid is recirculated back into the server racks. On the right, a primary loop carries the removed heat from the CDU to an external cooler, where it’s expelled into the outside air. Arrows illustrate coolant and heat flow through both loops.

Another cooling innovation uses seawater as a heat sink, helping to reduce the need for freshwater. Start Campus, in the coastal city of Sines, south-west Portugal, plans to use this method to dissipate heat from servers in its upcoming 1.2GW AI data centre hub.

Water will be drawn from the Atlantic through pipes, passed through a heat exchanger to absorb excess warmth from the data centre’s internal cooling system and then returned to the ocean at a slightly higher temperature. Start Campus says the process will be subject to stringent environmental checks.

Cooling requirements are growing more complex after Nvidia said that its latest Blackwell chips would require “direct to chip” cooling, where coolant is passed through metal plates that are attached to GPUs, CPUs and other heat-generating components.

“Every chip manufacturer, every server assembler has a different manifold or different way to get liquid on to that cold plate,” said Bruno Berti, a senior vice-president at NTT Data. “It’s not just a hose that connects to these servers.”

Hyperscalers are also having to take stock and adjust their data centre designs to meet an evolving set of technical requirements.

Microsoft data centres were predominantly air cooled at the advent of AI but have shifted to water cooling to meet the needs of new workloads. The company has been forced to rethink the designs of some of its portfolio amid new power and cooling requirements. It paused construction on a $3.3bn campus in Wisconsin earlier this year as it took stock of its pre-existing portfolio and evaluated how “recent changes in technology” might affect the design of its facilities.

Breathless ambition

Despite the hundreds of billions of dollars that have been invested in AI infrastructure since ChatGPT’s debut, many Silicon Valley leaders show no indication that they want to pause for breath — or to figure out what the returns on that capital might look like.

Nvidia said in May that Big Tech companies were adding tens of thousands of its latest GPUs to their systems every week, a pace that was only set to accelerate as its next-generation “Blackwell Ultra” systems were rolled out. The chipmaker’s roadmap projects that in two years’ time, its “Rubin Ultra” systems will cram more than 500 GPUs into a single rack that will consume 600 kilowatts of power, creating fresh challenges on energy and cooling.
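To see what such racks mean for a gigawatt-scale site, the figures quoted in this article can be combined in a rough calculation. It is a sketch under stated assumptions: the rack holds exactly 500 GPUs (the roadmap says "more than"), cooling takes the two-fifths share estimated by McKinsey, and all remaining power reaches the racks.

```python
rack_power_kw = 600          # Rubin Ultra rack, per Nvidia's roadmap
gpus_per_rack = 500          # "more than 500", so treated as a lower bound
campus_power_gw = 1.0        # the gigawatt-scale site described earlier
cooling_share = 2 / 5        # McKinsey's estimate for cooling

# Upper bound on power per GPU, because the real GPU count exceeds 500
power_per_gpu_kw = rack_power_kw / gpus_per_rack

# Assumption: everything that is not spent on cooling reaches the racks
it_power_kw = campus_power_gw * 1e6 * (1 - cooling_share)
racks = it_power_kw / rack_power_kw

print(f"Power per GPU: at most {power_per_gpu_kw:.1f} kW")
print(f"Racks supported by a 1GW campus: about {racks:,.0f}")
```

On those assumptions, a 1GW campus could power about 1,000 such racks, each GPU drawing at most 1.2kW.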

Zuckerberg’s announcement that Meta will spend hundreds of billions of dollars on AI computing power as the company strives for superintelligence makes even the monster pay offers he has been using to poach AI researchers look like small change.

OpenAI’s Sam Altman has said he envisages facilities that will go “way beyond” 10GW of power, requiring “new technologies and new construction”.

Underpinning this breathless ambition is a belief that the so-called “scaling law” of AI — that more data and more computing power will deliver more intelligence — can continue indefinitely, with new techniques such as “reasoning” only adding to the load as users set their chatbots ever-more complex tasks.

That has data centre designers rushing for new ideas on how to house, cool and power all these new GPUs. Yet the relentless building underway shows no signs of abating.

“At some point, will it slow down?” said Mohamed Awad, who leads the infrastructure business at chip designer Arm. “It has to. But we don’t see it happening any time soon.”

Reporting team: Nassos Stylianou, Sam Learner, Tim Bradshaw, Rafe Uddin, Ian Bott, Caroline Nevitt, Dan Clark and Sam Joiner

Additional work by Melissa Heikkila, Tabby Kinder, Chris Campbell, Aditi Bhandari and Bob Haslett

Sources: Drone footage provided by Start Campus. Data on US data centre clusters from DC Byte. Arup was also consulted on data centre design.

Epoch.ai estimate that their data covers about 20 per cent of all AI supercomputers, but believe overall distribution is broadly representative.